Offers the functions to create and to work with a feed-forward network.

#include <shark/Models/FFNet.h>

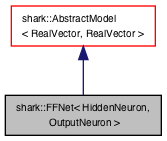

Inheritance diagram for shark::FFNet< HiddenNeuron, OutputNeuron >:

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | FFNet () | |
| std::string | name () const | From INameable: return the class name. |
| std::size_t | inputSize () const | Number of input neurons. |
| std::size_t | outputSize () const | Number of output neurons. |
| std::size_t | numberOfNeurons () const | Total number of neurons, that is inputs+hidden+outputs. |
| std::size_t | numberOfHiddenNeurons () const | Total number of hidden neurons. |
| std::vector< RealMatrix > const & | layerMatrices () const | Returns the matrices for every layer used by eval. |
| RealMatrix const & | layerMatrix (std::size_t layer) const | Returns the weight matrix of the i-th layer. |
| void | setLayer (std::size_t layerNumber, RealMatrix const &m, RealVector const &bias) | |
| std::vector< RealMatrix > const & | backpropMatrices () const | Returns the matrices for every layer used by backpropagation. |
| RealMatrix const & | inputOutputShortcut () const | Returns the direct shortcuts between input and output neurons. |
| HiddenNeuron const & | hiddenActivationFunction () const | Returns the activation function of the hidden units. |
| OutputNeuron const & | outputActivationFunction () const | Returns the activation function of the output units. |
| HiddenNeuron & | hiddenActivationFunction () | Returns the activation function of the hidden units. |
| OutputNeuron & | outputActivationFunction () | Returns the activation function of the output units. |
| const RealVector & | bias () const | Returns the bias values for hidden and output units. |
| RealVector | bias (std::size_t layer) const | Returns the portion of the bias vector of the i-th layer. |
| std::size_t | numberOfParameters () const | Returns the total number of parameters of the network. |
| RealVector | parameterVector () const | Returns the vector of used parameters inside the weight matrix. |
| void | setParameterVector (RealVector const &newParameters) | Uses the values inside the parameter vector to set the used values inside the weight matrix. |
| RealMatrix const & | neuronResponses (State const &state) const | Returns the output of all neurons after the last call of eval. |
| boost::shared_ptr< State > | createState () const | Creates an internal state of the model. |
| void | evalLayer (std::size_t layer, RealMatrix const &patterns, RealMatrix &outputs) const | Returns the response of the i-th layer given the input of that layer. |
| Data< RealVector > | evalLayer (std::size_t layer, Data< RealVector > const &patterns) const | Returns the response of the i-th layer given the input of that layer. |
| void | eval (RealMatrix const &patterns, RealMatrix &output, State &state) const | |
| void | weightedParameterDerivative (BatchInputType const &patterns, RealMatrix const &coefficients, State const &state, RealVector &gradient) const | |
| void | weightedInputDerivative (BatchInputType const &patterns, RealMatrix const &coefficients, State const &state, BatchInputType &inputDerivative) const | |
| virtual void | weightedDerivatives (BatchInputType const &patterns, BatchOutputType const &coefficients, State const &state, RealVector &parameterDerivative, BatchInputType &inputDerivative) const | Calculates the weighted input and parameter derivatives at the same time. |
| void | weightedParameterDerivativeFullDelta (RealMatrix const &patterns, RealMatrix &delta, State const &state, RealVector &gradient) const | Calculates the derivative for the special case when error terms for all neurons of the network exist. |
| void | setStructure (std::vector< size_t > const &layers, FFNetStructures::ConnectionType connectivity=FFNetStructures::Normal, bool biasNeuron=true) | Creates a connection matrix for a network. |
| void | setStructure (std::size_t in, std::size_t hidden, std::size_t out, FFNetStructures::ConnectionType connectivity=FFNetStructures::Normal, bool bias=true) | Creates a connection matrix for a network with a single hidden layer. |
| void | setStructure (std::size_t in, std::size_t hidden1, std::size_t hidden2, std::size_t out, FFNetStructures::ConnectionType connectivity=FFNetStructures::Normal, bool bias=true) | Creates a connection matrix for a network with two hidden layers. |
| void | read (InArchive &archive) | From ISerializable, reads a model from an archive. |
| void | write (OutArchive &archive) const | From ISerializable, writes a model to an archive. |

Public Member Functions inherited from shark::AbstractModel< RealVector, RealVector >

| Type | Member | Description |
|---|---|---|
| | AbstractModel () | |
| virtual | ~AbstractModel () | |
| const Features & | features () const | |
| virtual void | updateFeatures () | |
| bool | hasFirstParameterDerivative () const | Returns true when the first parameter derivative is implemented. |
| bool | hasSecondParameterDerivative () const | Returns true when the second parameter derivative is implemented. |
| bool | hasFirstInputDerivative () const | Returns true when the first input derivative is implemented. |
| bool | hasSecondInputDerivative () const | Returns true when the second input derivative is implemented. |
| bool | isSequential () const | |
| virtual void | eval (BatchInputType const &patterns, BatchOutputType &outputs) const | Standard interface for evaluating the response of the model to a batch of patterns. |
| virtual void | eval (BatchInputType const &patterns, BatchOutputType &outputs, State &state) const=0 | Standard interface for evaluating the response of the model to a batch of patterns. |
| virtual void | eval (InputType const &pattern, OutputType &output) const | Standard interface for evaluating the response of the model to a single pattern. |
| Data< OutputType > | operator() (Data< InputType > const &patterns) const | Model evaluation as an operator for a whole dataset. This is a convenience function. |
| OutputType | operator() (InputType const &pattern) const | Model evaluation as an operator for a single pattern. This is a convenience function. |
| BatchOutputType | operator() (BatchInputType const &patterns) const | Model evaluation as an operator for a batch of patterns. This is a convenience function. |
| virtual void | weightedParameterDerivative (BatchInputType const &pattern, BatchOutputType const &coefficients, State const &state, RealVector &derivative) const | Calculates the weighted sum of derivatives w.r.t. the parameters. |
| virtual void | weightedParameterDerivative (BatchInputType const &pattern, BatchOutputType const &coefficients, Batch< RealMatrix >::type const &errorHessian, State const &state, RealVector &derivative, RealMatrix &hessian) const | Calculates the weighted sum of derivatives w.r.t. the parameters. |
| virtual void | weightedInputDerivative (BatchInputType const &pattern, BatchOutputType const &coefficients, State const &state, BatchInputType &derivative) const | Calculates the weighted sum of derivatives w.r.t. the inputs. |
| virtual void | weightedInputDerivative (BatchInputType const &pattern, BatchOutputType const &coefficients, typename Batch< RealMatrix >::type const &errorHessian, State const &state, RealMatrix &derivative, Batch< RealMatrix >::type &hessian) const | Calculates the weighted sum of derivatives w.r.t. the inputs. |

Public Member Functions inherited from shark::IParameterizable

| Type | Member | Description |
|---|---|---|
| virtual | ~IParameterizable () | |

Public Member Functions inherited from shark::INameable

| Type | Member | Description |
|---|---|---|
| virtual | ~INameable () | |

Public Member Functions inherited from shark::ISerializable

| Type | Member | Description |
|---|---|---|
| virtual | ~ISerializable () | Virtual destructor. |
| void | load (InArchive &archive, unsigned int version) | Versioned loading of components, calls read(...). |
| void | save (OutArchive &archive, unsigned int version) const | Versioned storing of components, calls write(...). |
| | BOOST_SERIALIZATION_SPLIT_MEMBER () | |

Additional Inherited Members

Public Types inherited from shark::AbstractModel< RealVector, RealVector >

| Type | Name | Description |
|---|---|---|
| enum | Feature | |
| typedef RealVector | InputType | Defines the input type of the model. |
| typedef RealVector | OutputType | Defines the output type of the model. |
| typedef Batch< InputType >::type | BatchInputType | Defines the batch type of the input type. |
| typedef Batch< OutputType >::type | BatchOutputType | Defines the batch type of the output type. |
| typedef TypedFlags< Feature > | Features | |
| typedef TypedFeatureNotAvailableException< Feature > | FeatureNotAvailableException | |

Protected Attributes inherited from shark::AbstractModel< RealVector, RealVector >

| Type | Name | Description |
|---|---|---|
| Features | m_features | |

Detailed Description

template<class HiddenNeuron, class OutputNeuron>

class shark::FFNet< HiddenNeuron, OutputNeuron >

Offers the functions to create and to work with a feed-forward network.

A feed-forward network consists of several layers. Every layer consists of a linear function with an optional bias, whose response is modified by a (nonlinear) activation function. Starting from the input layer, the output of every layer is the input of the next. The two template arguments govern the activation functions of the network; these are typically sigmoidal. All hidden layers share one activation function, while the output layer can use a different one, for example a linear output function, which allows the outputs to be unbounded. Arbitrary activation functions cannot be used: only neurons that follow the structure defined in Models/Neurons.h are supported. In particular, the derivative of the activation function must have the form f'(x) = g(f(x)), that is, it must be computable from the neuron's output alone.
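The condition f'(x) = g(f(x)) can be checked numerically. The following standalone C++ sketch does not use Shark's Neurons.h; the types LogisticSketch, TanhSketch and LinearSketch are hypothetical. Each pairs an activation with a derivative expressed through the output (g(y) = y(1 - y) for the logistic function, 1 - y² for tanh, 1 for the linear function), and the helper compares g(f(x)) against a central finite difference.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical neuron types in the spirit of Models/Neurons.h (not the Shark API).
// Each provides the activation f(x) and the derivative written in terms of
// the output y = f(x), so that f'(x) = g(f(x)).
struct LogisticSketch {
    double operator()(double x) const { return 1.0 / (1.0 + std::exp(-x)); }
    double derivative(double y) const { return y * (1.0 - y); }
};

struct TanhSketch {
    double operator()(double x) const { return std::tanh(x); }
    double derivative(double y) const { return 1.0 - y * y; }
};

struct LinearSketch {
    double operator()(double x) const { return x; }
    double derivative(double /*y*/) const { return 1.0; }
};

// Central finite difference of f at x.
template <class Neuron>
double numericDerivative(Neuron const& f, double x, double h = 1e-6) {
    return (f(x + h) - f(x - h)) / (2.0 * h);
}

// True if g(f(x)) agrees with the numerical derivative of f at x.
template <class Neuron>
bool derivativeFromOutputMatches(Neuron const& f, double x, double tol = 1e-6) {
    double const y = f(x);
    return std::fabs(f.derivative(y) - numericDerivative(f, x)) < tol;
}
```

Because g only needs y, a backward pass can work from the stored neuron outputs alone, without keeping the pre-activations.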

This network class supports several topologies. The layer-wise structure outlined above is the default, but the network also allows shortcuts. Most commonly, an input-output shortcut is used, which connects the input neurons directly to the output neurons using linear weights. A fully connected structure is also possible, in which every layer is fed as input to every subsequent layer instead of only the next one.
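To make the three topologies concrete, the sketch below counts the connection weights (biases excluded) that each one implies for given layer sizes. It is plain C++ under stated assumptions: the Topology enum is a hypothetical stand-in for FFNetStructures::ConnectionType, Normal is assumed to connect only consecutive layers, InputOutputShortcut to add direct input-to-output weights, and Full to connect every layer to every later layer. It does not reproduce numberOfParameters(), which also counts biases.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for FFNetStructures::ConnectionType.
enum class Topology { Normal, InputOutputShortcut, Full };

// Counts connection weights (biases excluded) for the given layer sizes,
// where layers.front() is the number of inputs and layers.back() the outputs.
std::size_t countWeights(std::vector<std::size_t> const& layers, Topology t) {
    if (layers.size() < 2) throw std::invalid_argument("need at least 2 layers");
    std::size_t const n = layers.size();
    std::size_t total = 0;
    if (t == Topology::Full) {
        // Every layer feeds every later layer.
        for (std::size_t i = 0; i < n; ++i)
            for (std::size_t j = i + 1; j < n; ++j)
                total += layers[i] * layers[j];
        return total;
    }
    // Layer-wise connections between consecutive layers.
    for (std::size_t i = 0; i + 1 < n; ++i)
        total += layers[i] * layers[i + 1];
    // The shortcut adds input->output weights, unless input and output
    // are already adjacent (no hidden layer).
    if (t == Topology::InputOutputShortcut && n > 2)
        total += layers.front() * layers.back();
    return total;
}
```

For layers {2, 3, 1} the shortcut and full variants both give 11 weights, consistent with the remark in the setStructure documentation that the two topologies coincide for a single hidden layer.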

Constructor & Destructor Documentation

◆ FFNet()

[inline]

Creates an empty feed-forward network. After the constructor is called, one version of the setStructure methods needs to be called to define the network topology.

Member Function Documentation

◆ backpropMatrices()

[inline]

◆ bias() [1/2]

[inline]

Returns the bias values for hidden and output units.

This is either empty or a vector of size numberOfNeurons()-inputSize(). The first entry is the bias of the first hidden unit, and the last outputSize() entries are the biases of the output units.

◆ bias() [2/2]

[inline]

Returns the portion of the bias vector of the i-th layer.

Definition at line 190 of file FFNet.h.

References remora::subrange().
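The bias layout described for bias() can be illustrated by slicing a flat vector per layer, which is roughly what bias(layer) is documented to return. This is a plain C++ sketch; biasOfLayer is a hypothetical helper, and it assumes that layer 0 denotes the first layer after the inputs and that the entries run from the first hidden unit to the last output unit.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Returns the part of a flat bias vector that belongs to weight layer `layer`.
// Assumed layout: one entry per non-input neuron, ordered from the first
// hidden unit to the last output unit; layer 0 follows the inputs.
std::vector<double> biasOfLayer(std::vector<double> const& bias,
                                std::vector<std::size_t> const& layers,
                                std::size_t layer) {
    // A network with L entries in `layers` has L - 1 weight layers.
    if (layer + 1 >= layers.size()) throw std::out_of_range("no such layer");
    std::size_t offset = 0;
    for (std::size_t i = 1; i <= layer; ++i) offset += layers[i]; // skip earlier layers
    std::size_t const size = layers[layer + 1];
    if (offset + size > bias.size()) throw std::length_error("bias vector too short");
    auto first = bias.begin() + static_cast<std::ptrdiff_t>(offset);
    return std::vector<double>(first, first + static_cast<std::ptrdiff_t>(size));
}
```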

◆ createState()

[inline, virtual]

Creates an internal state of the model.

The state is needed when derivatives are to be calculated: eval can store intermediate results in the state, which are then reused to speed up the calculation of the derivatives. Because these results are kept in the state rather than in the model, this also allows eval to be called in parallel.

Reimplemented from shark::AbstractModel< RealVector, RealVector >.
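The idea behind createState can be sketched without Shark: eval writes its intermediate results into a caller-owned state object instead of into the model, and the derivative reads them back. The types TanhUnit and TanhUnitState below are hypothetical, model a single tanh unit rather than a full network, and are not Shark's State class. Since eval is const and touches only the state passed in, separate threads can evaluate the same model as long as each uses its own state.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical per-call state: intermediate results of eval that the
// derivative reuses. The model itself is never modified by eval.
struct TanhUnitState {
    std::vector<double> outputs; // y_k = tanh(w . x_k + b) for each pattern k
};

// A single tanh unit y = tanh(w . x + b), evaluated on a batch of patterns.
struct TanhUnit {
    std::vector<double> w;
    double b = 0.0;

    void eval(std::vector<std::vector<double>> const& patterns,
              std::vector<double>& out, TanhUnitState& state) const {
        out.clear();
        for (auto const& x : patterns) {
            double a = b;
            for (std::size_t i = 0; i < w.size(); ++i) a += w[i] * x[i];
            out.push_back(std::tanh(a));
        }
        state.outputs = out; // keep what the derivative will need
    }

    // Gradient of sum_k c_k * y_k w.r.t. (w, b), reusing the stored outputs
    // instead of recomputing the forward pass: dy/da = 1 - y^2.
    void weightedParameterDerivative(std::vector<std::vector<double>> const& patterns,
                                     std::vector<double> const& coefficients,
                                     TanhUnitState const& state,
                                     std::vector<double>& gradient) const {
        gradient.assign(w.size() + 1, 0.0);
        for (std::size_t k = 0; k < patterns.size(); ++k) {
            double const y = state.outputs[k];
            double const delta = coefficients[k] * (1.0 - y * y);
            for (std::size_t i = 0; i < w.size(); ++i) gradient[i] += delta * patterns[k][i];
            gradient[w.size()] += delta; // bias entry
        }
    }
};
```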

◆ eval()

[inline]

Definition at line 319 of file FFNet.h.

References remora::noalias(), remora::prod(), remora::repeat(), remora::rows(), SHARK_CRITICAL_REGION, SIZE_CHECK, remora::subrange(), shark::State::toState(), and remora::trans().

◆ evalLayer() [1/2]

[inline]

Returns the response of the i-th layer given the input of that layer.

This is useful if only a portion of the network needs to be evaluated. Be aware that this only works if the network has no shortcuts.

Definition at line 285 of file FFNet.h.

References remora::noalias(), remora::prod(), remora::repeat(), and remora::trans().

Referenced by main().

◆ evalLayer() [2/2]

[inline]

Returns the response of the i-th layer given the input of that layer.

This is useful if only a portion of the network needs to be evaluated. Be aware that this only works if the network has no shortcuts.

Definition at line 310 of file FFNet.h.

References shark::Data< Type >::batch(), shark::Data< Type >::numberOfBatches(), and SHARK_PARALLEL_FOR.
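The following plain C++ sketch shows what evaluating a single layer on a batch amounts to, with one row per pattern: each output is the activation of a weighted sum of the layer's inputs plus a bias. evalLayerSketch is a hypothetical helper; it assumes tanh units and a weight matrix with one row per neuron of the layer and one column per input, which is an assumption about orientation rather than a statement about layerMatrix().

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>; // row-major

// Response of one layer to its own input, for a batch of patterns:
// outputs(k, j) = tanh( sum_i W(j, i) * patterns(k, i) + bias(j) ).
// Assumed orientation: W has one row per neuron of this layer and one column
// per neuron of the previous layer. With shortcuts, a layer would also need
// inputs from earlier layers, so this per-layer view no longer applies.
Matrix evalLayerSketch(Matrix const& W, std::vector<double> const& bias,
                       Matrix const& patterns) {
    Matrix outputs(patterns.size(), std::vector<double>(W.size()));
    for (std::size_t k = 0; k < patterns.size(); ++k)
        for (std::size_t j = 0; j < W.size(); ++j) {
            double a = bias[j];
            for (std::size_t i = 0; i < W[j].size(); ++i) a += W[j][i] * patterns[k][i];
            outputs[k][j] = std::tanh(a);
        }
    return outputs;
}
```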

◆ hiddenActivationFunction() [1/2]

[inline]

◆ hiddenActivationFunction() [2/2]

[inline]

◆ inputOutputShortcut()

[inline]

◆ inputSize()

[inline]

◆ layerMatrices()

[inline]

◆ layerMatrix()

[inline]

◆ name()

[inline, virtual]

From INameable: return the class name.

Reimplemented from shark::INameable.

◆ neuronResponses()

[inline]

Returns the output of all neurons after the last call of eval.

Parameters
- state: last result of eval.

Returns
- Output values of the neurons.

Definition at line 272 of file FFNet.h.

References shark::State::toState().

◆ numberOfHiddenNeurons()

[inline]

◆ numberOfNeurons()

[inline]

◆ numberOfParameters()

[inline, virtual]

Returns the total number of parameters of the network.

Reimplemented from shark::IParameterizable.

Definition at line 199 of file FFNet.h.

Referenced by trainProblem().

◆ outputActivationFunction() [1/2]

[inline]

◆ outputActivationFunction() [2/2]

[inline]

◆ outputSize()

[inline]

◆ parameterVector()

[inline, virtual]

Returns the vector of used parameters inside the weight matrix.

Reimplemented from shark::IParameterizable.

Definition at line 209 of file FFNet.h.

References remora::noalias(), remora::subrange(), and remora::to_vector().

◆ read()

[inline, virtual]

From ISerializable, reads a model from an archive.

Reimplemented from shark::AbstractModel< RealVector, RealVector >.

◆ setLayer()

[inline]

Definition at line 136 of file FFNet.h.

References remora::noalias(), SIZE_CHECK, and remora::subrange().

Referenced by main(), and unsupervisedPreTraining().

◆ setParameterVector()

[inline, virtual]

Uses the values inside the parameter vector to set the used values inside the weight matrix.

Reimplemented from shark::IParameterizable.

Definition at line 221 of file FFNet.h.

References remora::columns(), remora::noalias(), remora::subrange(), remora::to_vector(), and remora::trans().

Referenced by main().
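parameterVector() and setParameterVector() expose all trainable values as one flat vector. The sketch below shows a round trip of that kind in plain C++; NetParams, toParameterVector and fromParameterVector are hypothetical, and the ordering (weights layer by layer and row by row, then the bias) is an assumption chosen for illustration, not necessarily the order FFNet uses.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical container mirroring the pieces a feed-forward net stores.
struct NetParams {
    std::vector<Matrix> layers; // one weight matrix per layer
    std::vector<double> bias;   // one entry per non-input neuron
};

// Concatenates all weights (layer by layer, row by row) followed by the bias.
// The ordering is an assumption made for illustration only.
std::vector<double> toParameterVector(NetParams const& p) {
    std::vector<double> v;
    for (auto const& m : p.layers)
        for (auto const& row : m) v.insert(v.end(), row.begin(), row.end());
    v.insert(v.end(), p.bias.begin(), p.bias.end());
    return v;
}

// Writes the values back in the same order; the shapes are taken from p.
void fromParameterVector(NetParams& p, std::vector<double> const& v) {
    std::size_t pos = 0;
    auto next = [&]() {
        if (pos >= v.size()) throw std::length_error("parameter vector too short");
        return v[pos++];
    };
    for (auto& m : p.layers)
        for (auto& row : m)
            for (auto& x : row) x = next();
    for (auto& x : p.bias) x = next();
    if (pos != v.size()) throw std::length_error("parameter vector too long");
}
```

What matters for an optimizer is only that both directions use the same order, so a vector read with one function can be written back with the other.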

◆ setStructure() [1/3]

[inline]

Creates a connection matrix for a network.

Automatically creates a network with several layers, where the number of neurons in each layer is given by layers. The vector layers must contain at least 2 entries; exactly 2 entries result in a network without hidden layers. The first and last values correspond to the number of inputs and outputs, respectively.

The network supports three types of connection models: FFNetStructures::Normal corresponds to layer-wise connections between consecutive layers. FFNetStructures::InputOutputShortcut additionally adds a shortcut between the input and output neurons. FFNetStructures::Full connects every layer to every following layer, which also includes the shortcut between input and output neurons. Additionally, a bias term can be used.

While Normal and Full only use the layer matrices, InputOutputShortcut additionally uses the shortcut matrix returned by inputOutputShortcut(). Be aware that with only one hidden layer, the input-output shortcut yields the same network as Full; in that case the Full topology is chosen for optimization reasons.

Parameters
- layers: the number of neurons for each layer of the network.
- connectivity: type of connection used between layers.
- biasNeuron: if set to true, all neurons except the input neurons are connected to the bias.

Definition at line 474 of file FFNet.h.

References shark::FFNetStructures::Full, shark::FFNetStructures::InputOutputShortcut, and SIZE_CHECK.

Referenced by experiment(), main(), trainProblem(), and unsupervisedPreTraining().
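The sizes reported by inputSize(), outputSize(), numberOfNeurons(), numberOfHiddenNeurons() and the length of bias() all follow from the layers argument. The plain C++ helper below (describe and LayerSizes are hypothetical names) computes them from the definitions given in this documentation, assuming that bias() has numberOfNeurons()-inputSize() entries when a bias is used and is empty otherwise.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <stdexcept>
#include <vector>

// Hypothetical summary of the sizes implied by a layers vector.
struct LayerSizes {
    std::size_t inputs, outputs, neurons, hidden, biasEntries;
};

LayerSizes describe(std::vector<std::size_t> const& layers, bool bias) {
    if (layers.size() < 2)
        throw std::invalid_argument("layers must contain at least 2 entries");
    LayerSizes s{};
    s.inputs = layers.front();
    s.outputs = layers.back();
    // Total number of neurons: inputs + hidden + outputs.
    s.neurons = std::accumulate(layers.begin(), layers.end(), std::size_t{0});
    s.hidden = s.neurons - s.inputs - s.outputs;
    // bias() is either empty or has numberOfNeurons() - inputSize() entries.
    s.biasEntries = bias ? s.neurons - s.inputs : 0;
    return s;
}
```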

◆ setStructure() [2/3]

[inline]

Creates a connection matrix for a network with a single hidden layer.

Automatically creates a network with three layers: an input layer with in input neurons, an output layer with out output neurons, and one hidden layer with hidden neurons.

Parameters
- in: number of input neurons.
- hidden: number of neurons of the hidden layer.
- out: number of output neurons.
- connectivity: type of connectivity between the layers.
- bias: if set to true, all neurons except the input neurons are connected to the bias.

◆ setStructure() [3/3]

[inline]

Creates a connection matrix for a network with two hidden layers.

Automatically creates a network with four different layers: An input layer with in input neurons, an output layer with out output neurons and two hidden layers with hidden1 and hidden2 hidden neurons, respectively.

Parameters
- in: number of input neurons.
- hidden1: number of neurons of the first hidden layer.
- hidden2: number of neurons of the second hidden layer.
- out: number of output neurons.
- connectivity: type of connectivity between the layers.
- bias: if set to true, all neurons except the input neurons are connected to the bias.

◆ weightedDerivatives()

[inline, virtual]

Calculates the weighted input and parameter derivatives at the same time.

Sometimes both derivatives are needed at the same time, and when calculating the weighted parameter derivative, the input derivative can sometimes be obtained almost for free. This is the case, for example, for feed-forward neural networks. The default implementation, however, simply calculates the two derivatives one after the other.

Parameters
- patterns: the patterns to evaluate.
- coefficients: the coefficients used to calculate the weighted sum.
- state: intermediate results stored by eval to speed up the calculation of the derivatives.
- parameterDerivative: the calculated parameter derivative, summed over the derivatives of all patterns.
- inputDerivative: the calculated input derivative for every pattern.

Reimplemented from shark::AbstractModel< RealVector, RealVector >.

Definition at line 407 of file FFNet.h.

References remora::noalias(), remora::rows(), SIZE_CHECK, and remora::trans().
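Why the input derivative comes almost for free can be seen for a single tanh layer y = tanh(Wx + b): both derivatives are built from the same backpropagated error δ = c ⊙ (1 - y²). The sketch below is plain C++ with a hypothetical function name; it takes the layer outputs Y from a forward pass (playing the role of the state) and accumulates the parameter gradient and the per-pattern input derivative in the same loop.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>; // rows = patterns or neurons

// For one tanh layer y = tanh(W x + b), computes in a single pass
//   paramGrad / biasGrad: d/d(W, b) of sum_k c_k . y_k (summed over patterns)
//   inputGrad:            d/dx_k of c_k . y_k        (one row per pattern)
// Both reuse the same error delta_k = c_k * (1 - y_k^2).
void weightedDerivativesSketch(Matrix const& W, Matrix const& X, Matrix const& Y,
                               Matrix const& C, Matrix& paramGrad,
                               std::vector<double>& biasGrad, Matrix& inputGrad) {
    std::size_t const out = W.size(), in = W[0].size();
    paramGrad.assign(out, std::vector<double>(in, 0.0));
    biasGrad.assign(out, 0.0);
    inputGrad.assign(X.size(), std::vector<double>(in, 0.0));
    for (std::size_t k = 0; k < X.size(); ++k)
        for (std::size_t j = 0; j < out; ++j) {
            double const delta = C[k][j] * (1.0 - Y[k][j] * Y[k][j]);
            biasGrad[j] += delta;
            for (std::size_t i = 0; i < in; ++i) {
                paramGrad[j][i] += delta * X[k][i]; // parameter derivative
                inputGrad[k][i] += delta * W[j][i]; // input derivative
            }
        }
}
```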

◆ weightedInputDerivative()

[inline]

Definition at line 389 of file FFNet.h.

References remora::noalias(), remora::rows(), SIZE_CHECK, and remora::trans().

◆ weightedParameterDerivative()

[inline]

Definition at line 371 of file FFNet.h.

References remora::noalias(), remora::rows(), SIZE_CHECK, and remora::trans().

◆ weightedParameterDerivativeFullDelta()

[inline]

Calculates the derivative for the special case when error terms for all neurons of the network exist.

This is useful when the hidden neurons need to meet additional requirements. The value of delta is changed during computation and holds the results of the backpropagation steps. The format is such that the rows of delta are the neurons and the columns the patterns.

Definition at line 437 of file FFNet.h.

References SIZE_CHECK, and shark::State::toState().
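With the neuron-major layout described above (rows of delta are neurons, columns are patterns), the gradient of one layer's weights is a single matrix product of that layer's rows of delta with the transposed responses of the layer feeding it, and the bias gradient is a row sum. The plain C++ sketch below illustrates this; layerGradientFromDelta is hypothetical and does not reproduce how FFNet stores its responses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// delta:         neurons of this layer x patterns
// prevResponses: neurons of the feeding layer x patterns
// weightGrad = delta * prevResponses^T, biasGrad = row sums of delta.
void layerGradientFromDelta(Matrix const& delta, Matrix const& prevResponses,
                            Matrix& weightGrad, std::vector<double>& biasGrad) {
    std::size_t const rows = delta.size(), cols = prevResponses.size();
    weightGrad.assign(rows, std::vector<double>(cols, 0.0));
    biasGrad.assign(rows, 0.0);
    for (std::size_t j = 0; j < rows; ++j)
        for (std::size_t k = 0; k < delta[j].size(); ++k) { // k runs over patterns
            biasGrad[j] += delta[j][k];
            for (std::size_t i = 0; i < cols; ++i)
                weightGrad[j][i] += delta[j][k] * prevResponses[i][k];
        }
}
```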

◆ write()

[inline, virtual]

From ISerializable, writes a model to an archive.

Reimplemented from shark::AbstractModel< RealVector, RealVector >.

Definition at line 597 of file FFNet.h.

References remora::noalias(), remora::prod(), remora::row(), remora::rows(), SIZE_CHECK, remora::subrange(), remora::sum(), remora::to_matrix(), shark::State::toState(), and remora::trans().

The documentation for this class was generated from the following file:

- include/shark/Models/FFNet.h