Denoising Autoencoders

This tutorial builds on the previous Autoencoders tutorial. We recommend starting with that article if you are not familiar with autoencoders as implemented in Shark.

In this tutorial we will have a closer look at denoising autoencoders [VincentEtAl08]. The idea behind them is to change the standard autoencoder problem to

\[ \min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \left( x_i - f_{\theta}(x_i + \epsilon) \right)^2 \]

where \(x_i\), \(i=1,\dots,N\), are the data points, \(f_\theta\) is the autoencoder with parameter vector \(\theta\), and \(\epsilon\) is a new noise term which corrupts the input. While the standard autoencoder problem is to find the parameter vector \(\theta\) for which the model best maps each input onto itself, the goal is now to reconstruct the original data point from its corrupted version. This makes sense intuitively: when single pixels of an image are corrupted, we are still able to recognize what the image depicts, even with a large amount of noise. It also makes sense from an optimisation point of view: with large intermediate representations it becomes more and more likely that single neurons specialise on single input images. Most of the time this happens by focussing on a certain combination of outlier pixels, which is classical overfitting. Adding noise to these outliers prevents overfitting on these inputs, and more stable features are preferred instead.
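To make the objective concrete, the following is a minimal, library-independent sketch of how the denoising error could be evaluated. It assumes a squared reconstruction error averaged over the data set; the names `denoisingError`, `identityModel` and `zeroFirst` are hypothetical and are not part of Shark.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Map = std::function<Vec(const Vec&)>;

// Mean squared reconstruction error of `model` on corrupted inputs.
// The target is always the clean point x, never the corrupted one.
double denoisingError(const std::vector<Vec>& data, const Map& corrupt, const Map& model) {
    assert(!data.empty());
    double total = 0.0;
    for (const Vec& x : data) {
        const Vec reconstruction = model(corrupt(x));
        assert(reconstruction.size() == x.size());
        for (std::size_t j = 0; j < x.size(); ++j) {
            const double diff = x[j] - reconstruction[j];
            total += diff * diff;
        }
    }
    return total / static_cast<double>(data.size());
}

// Hypothetical helpers for illustration only: a "model" that copies its
// input, and a corruption that sets the first component to zero.
inline Vec identityModel(const Vec& x) { return x; }
inline Vec zeroFirst(const Vec& x) {
    Vec y = x;
    if (!y.empty()) y[0] = 0.0;
    return y;
}
```

With the two points (1, 2) and (3, 4), the copying model has zero error on clean inputs but an error of 5 once the first component is zeroed. This is the sense in which the denoising objective penalises a model that merely copies its input.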

The following includes are needed for this tutorial:

```cpp
#include <shark/Data/Pgm.h> //for exporting the learned filters
#include <shark/Data/SparseData.h> //for reading in the images in sparse data/LIBSVM format
#include <shark/Models/Autoencoder.h> //normal autoencoder model
#include <shark/Models/TiedAutoencoder.h> //autoencoder with tied weights
#include <shark/Models/ImpulseNoiseModel.h> //noise source to corrupt the inputs
#include <shark/Models/ConcatenatedModel.h> //to concatenate the noise with the model
#include <shark/ObjectiveFunctions/ErrorFunction.h> //error function to be optimized
#include <shark/Algorithms/GradientDescent/Rprop.h> //the Rprop optimization algorithm
#include <shark/ObjectiveFunctions/Loss/SquaredLoss.h> //squared loss used for regression
#include <shark/ObjectiveFunctions/Regularizer.h> //L2 regularization
```

Training Denoising Autoencoders

There is not much to explain here, as we can build on the code of the previous tutorial. Thus we will only show the differences in the model definition.

We extend the previously created function with one additional input parameter, the strength of the noise:

```cpp
template<class AutoencoderModel>
AutoencoderModel trainAutoencoderModel(
	UnlabeledData<RealVector> const& data, //the data to train with
	std::size_t numHidden, //number of features in the autoencoder
	std::size_t iterations, //number of iterations to optimize
	double regularisation, //strength of the regularisation
	double noiseStrength //strength of the added noise
){
```

In our chosen noise model, this will be the probability that a pixel is set to 0. This makes sense for our application to the MNIST dataset, as the pixels are binary and 0 is the background. Thus the noise corrupts the appearance of the digits. This type of noise is represented by the ImpulseNoiseModel. The only thing we have to do is to concatenate it with the input of our autoencoder model and use the combined model in the ErrorFunction:

```cpp
//create the model
std::size_t inputs = dataDimension(data);
AutoencoderModel baseModel;
baseModel.setStructure(inputs, numHidden);
initRandomUniform(baseModel, -0.1*std::sqrt(1.0/inputs), 0.1*std::sqrt(1.0/inputs));
ImpulseNoiseModel noise(noiseStrength); //sets an input pixel to 0 with probability noiseStrength
ConcatenatedModel<RealVector,RealVector> model = noise >> baseModel;
//the derivatives of the noise model are not implemented, which makes the
//whole composite model non-differentiable. We fix this by not optimizing the noise model
model.enableModelOptimization(0,false);
```
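To illustrate what this kind of corruption does to a single input, here is a small stand-alone sketch of impulse noise that does not use Shark. The function name `applyImpulseNoise` is hypothetical, and the sketch only mirrors the behaviour described above (each pixel is set to 0 with a given probability); it is not Shark's implementation.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Returns a copy of x in which each component is set to 0 with
// probability p, independently of all other components.
std::vector<double> applyImpulseNoise(const std::vector<double>& x, double p, std::mt19937& rng) {
    assert(p >= 0.0 && p <= 1.0);
    std::bernoulli_distribution corruptPixel(p);
    std::vector<double> corrupted = x;
    for (double& value : corrupted) {
        if (corruptPixel(rng)) value = 0.0;
    }
    return corrupted;
}
```

With `p = 0` the input is returned unchanged, and with `p = 1` every pixel becomes background. Values in between erase roughly that fraction of the pixels, drawn anew on every call, so the model sees a different corruption of the same digit each time it is evaluated.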

That’s it. We can now re-run the previous experiments for the denoising autoencoder:

```cpp
typedef Autoencoder<LogisticNeuron, LogisticNeuron> Autoencoder1;
typedef TiedAutoencoder<LogisticNeuron, LogisticNeuron> Autoencoder2;

Autoencoder1 net1 = trainAutoencoderModel<Autoencoder1>(train.inputs(),numHidden,iterations,regularisation,noiseStrength);
Autoencoder2 net2 = trainAutoencoderModel<Autoencoder2>(train.inputs(),numHidden,iterations,regularisation,noiseStrength);

exportFiltersToPGMGrid("features1",net1.encoderMatrix(),28,28);
exportFiltersToPGMGrid("features2",net2.encoderMatrix(),28,28);
```
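The export calls pass 28 and 28 as image dimensions, so each learned filter is displayed as a 28x28 image. As a rough illustration of that idea, the sketch below rescales one filter to gray values and writes it as an ASCII PGM image. The function `filterToPgm` and the min-max rescaling are assumptions made for this example; they are not taken from Shark and may differ from what `exportFiltersToPGMGrid` does internally.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Rescales one filter (a flat vector of weights) linearly to 0..255 and
// returns it as an ASCII PGM ("P2") image of the given width and height.
std::string filterToPgm(const std::vector<double>& weights, std::size_t width, std::size_t height) {
    assert(width > 0 && height > 0 && weights.size() == width * height);
    const auto range = std::minmax_element(weights.begin(), weights.end());
    const double lo = *range.first;
    const double hi = *range.second;
    // A constant filter has no contrast; map it to all black.
    const double scale = (hi > lo) ? 255.0 / (hi - lo) : 0.0;

    std::ostringstream out;
    out << "P2\n" << width << ' ' << height << "\n255\n";
    for (std::size_t row = 0; row < height; ++row) {
        for (std::size_t col = 0; col < width; ++col) {
            const double w = weights[row * width + col];
            const int gray = static_cast<int>((w - lo) * scale + 0.5);  // round to nearest
            out << gray << (col + 1 < width ? ' ' : '\n');
        }
    }
    return out.str();
}
```

For an MNIST filter one would call it with a vector of 784 weights and `width = height = 28`.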

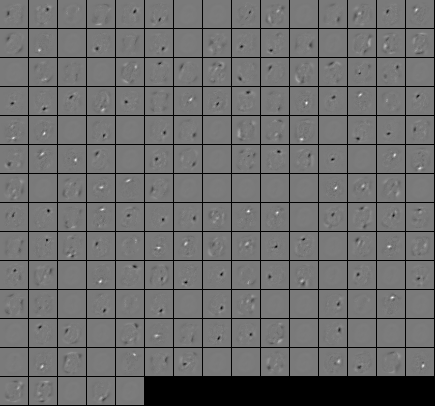

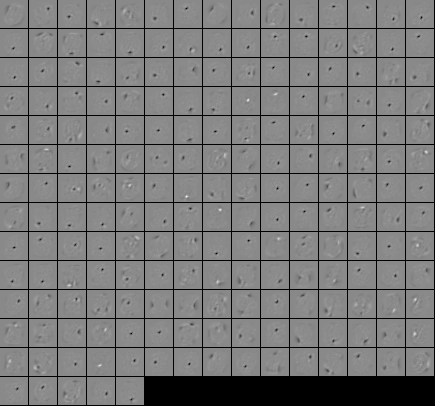

Visualizing the autoencoder¶

After training the different architectures, we can print the feature maps.

Normal autoencoder:

Autoencoder with tied weights:

Full example program

The full example program is DenoisingAutoencoderTutorial.cpp.

Attention

The parameter settings of the program reproduce the filters shown above. However, the program takes some time to run, which might be too long for weaker machines.

References

| [VincentEtAl08] | P. Vincent, H. Larochelle, Y. Bengio, and P.-A. Manzagol. Extracting and Composing Robust Features with Denoising Autoencoders. Proceedings of the Twenty-fifth International Conference on Machine Learning (ICML '08), pages 1096-1103, ACM, 2008. |