PhD Course

Information Geometry in Learning and Optimization

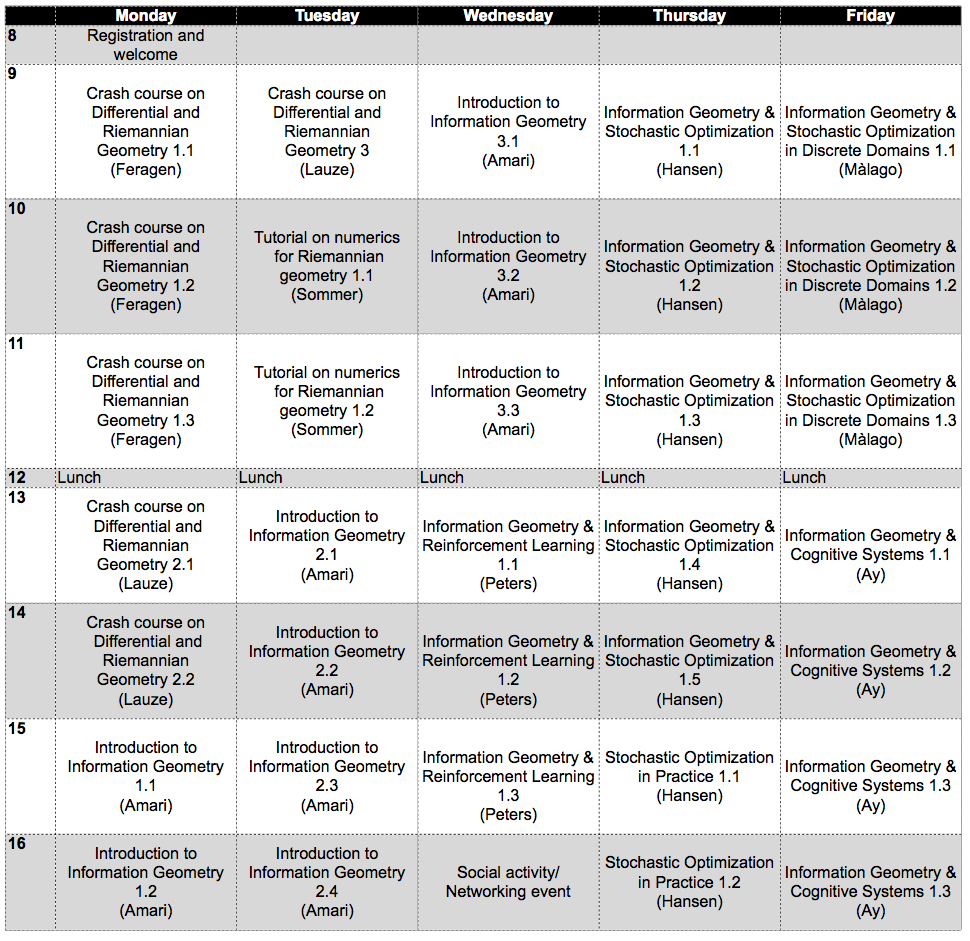

Preliminary program

Click here for the preliminary program in PDF format.

Social activities

- Monday: Pizza at 17:15. Leaving by metro for Vor Frue Plads at 18:20. Guided tour of the old university, including the dungeons, at 19:00.

- Wednesday: Bus to Nyhavn at 15:20, boat tour 16:00-17:00, bus to Nørrebro Bryghus (NB) at 17:20. Guided tour of NB at 18:00, dinner at NB at 19:00.

Lectures overview

Contents of Lectures by Shun-ichi Amari

Click on the main headings for slides.

- Introduction to Information Geometry without Knowledge of Differential Geometry

  - Divergence function on a manifold

  - Flat divergence and dual affine structures with the Riemannian metric derived from it

  - Two types of geodesics and orthogonality

  - Pythagorean theorem and projection theorem

  - Examples of dually flat manifolds: manifolds of probability distributions (exponential families), of positive measures, and of positive-definite matrices

- Geometrical Structure Derived from Invariance

  - Invariance and information monotonicity in the manifold of probability distributions

  - f-divergence: the unique invariant divergence

  - Dual affine connections and the Riemannian metric derived from a divergence: tangent space, parallel transport, and duality

  - Alpha-geometry induced from invariant geometry

  - Geodesics, curvature, and dually flat manifolds

    - Canonical divergence: KL- and alpha-divergence

- Applications of Information Geometry to Statistical Inference

  - Higher-order asymptotic theory of statistical inference: estimation and hypothesis testing

  - Neyman-Scott problem and semiparametric models

  - em (EM) algorithm and hidden variables

- Applications of Information Geometry to Machine Learning

  - Belief propagation and the CCCP algorithm in graphical models

  - Support vector machines and Riemannian modification of kernels

  - Bayesian information geometry and the geometry of restricted Boltzmann machines: towards deep learning

  - Natural gradient learning and its dynamics: singular statistical models and manifolds

  - Clustering with divergence

  - Sparse signal analysis

  - Convex optimization

Suggested reading:

- Amari, Shun-ichi. Natural gradient works efficiently in learning. Neural Computation 10, 2 (1998): 251-276.

- Amari, Shun-ichi, and Hiroshi Nagaoka. Methods of information geometry. Translations of Mathematical Monographs, Vol. 191. American Mathematical Society, 2007.
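The following numerical check makes the dually flat picture concrete. The example was chosen for this page and is not taken from the slides. Write the categorical family as an exponential family with natural parameters theta and log-partition function psi(theta) = log(1 + sum_i exp(theta_i)). Its canonical divergence is the Bregman divergence D_psi(theta', theta) = psi(theta') - psi(theta) - <grad psi(theta), theta' - theta>, and this equals KL(p_theta || p_theta'). The function names and parameter values are hypothetical, and the sketch uses plain Python only.

```python
import math


def log_partition(theta):
    """psi(theta) = log(1 + sum_i exp(theta_i)) for a categorical family on K outcomes.

    theta holds the K-1 natural parameters; the last outcome is the reference
    category whose natural parameter is fixed at 0.
    """
    return math.log1p(sum(math.exp(t) for t in theta))


def probs(theta):
    """Full probability vector (length K) for natural parameters theta."""
    z = [math.exp(t) for t in theta] + [1.0]
    total = sum(z)
    return [zi / total for zi in z]


def bregman_psi(theta_a, theta_b):
    """Bregman divergence D_psi(a, b) = psi(a) - psi(b) - <grad psi(b), a - b>.

    grad psi(b) is the expectation parameter eta(b): the first K-1 probabilities.
    """
    eta_b = probs(theta_b)[:-1]
    inner = sum(e * (a - b) for e, a, b in zip(eta_b, theta_a, theta_b))
    return log_partition(theta_a) - log_partition(theta_b) - inner


def kl(p, q):
    """Kullback-Leibler divergence KL(p || q) for strictly positive vectors."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


theta = [0.3, -1.2]
theta_prime = [1.0, 0.5]
print(bregman_psi(theta_prime, theta))       # D_psi(theta', theta)
print(kl(probs(theta), probs(theta_prime)))  # KL(p_theta || p_theta'), same value up to round-off
```

Swapping the arguments of D_psi gives a different value, which reflects the asymmetry of the KL divergence. The expectation parameters eta = grad psi(theta) used inside `bregman_psi` are the dual (m-affine) coordinates.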

Contents of Lectures by Nihat Ay

- Differential Equations:

  - Vector and Covector Fields

  - Fisher-Shahshahani Metric, Gradient Fields

  - m- and e-Linearity of Differential Equations

- Applications to Evolution:

  - Lotka-Volterra and Replicator Differential Equations

  - "Fisher's Fundamental Theorem of Natural Selection"

  - The Hypercycle Model of Eigen and Schuster

- Applications to Learning:

  - Information Geometry of Conditional Models

  - Amari's Natural Gradient Method

  - Information-Geometric Design of Learning Systems
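The sketch below illustrates the evolution topics. It integrates the replicator equation dx_i/dt = x_i (f_i - fbar), with fbar = sum_i x_i f_i, for constant fitness values. For linear fitness this vector field is the gradient of the mean fitness fbar with respect to the Fisher-Shahshahani metric on the simplex. Along it, d fbar/dt = Var_x(f), which is the content of "Fisher's Fundamental Theorem" in this simple setting. The fitness values, the initial state, and the forward-Euler step size are assumptions made for the example, and the function names are hypothetical.

```python
def mean_fitness(x, f):
    """fbar = sum_i x_i f_i."""
    return sum(xi * fi for xi, fi in zip(x, f))


def replicator_field(x, f):
    """Replicator vector field x_i (f_i - fbar) for constant fitness values f_i."""
    fbar = mean_fitness(x, f)
    return [xi * (fi - fbar) for xi, fi in zip(x, f)]


def fitness_variance(x, f):
    """Var_x(f) = sum_i x_i (f_i - fbar)^2."""
    fbar = mean_fitness(x, f)
    return sum(xi * (fi - fbar) ** 2 for xi, fi in zip(x, f))


def simulate(x0, f, h=0.01, steps=2000):
    """Forward-Euler integration; returns the final state and the mean-fitness history."""
    x = list(x0)
    history = [mean_fitness(x, f)]
    for _ in range(steps):
        v = replicator_field(x, f)
        x = [xi + h * vi for xi, vi in zip(x, v)]
        history.append(mean_fitness(x, f))
    return x, history


f = [1.0, 2.0, 3.5]  # hypothetical fitness values of three types
x_final, fbar_history = simulate([0.6, 0.3, 0.1], f)
print([round(xi, 3) for xi in x_final])  # mass concentrates on the fittest type
print(fbar_history[0], fbar_history[-1])  # mean fitness has increased
```

For a forward-Euler step the increase of fbar is exactly h * Var_x(f). The monotonicity therefore survives discretization up to round-off, and the sum of the x_i stays equal to 1.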

Contents of Lectures by Nikolaus Hansen

- A short introduction to continuous optimization

- Continuous optimization using natural gradients

- The Covariance Matrix Adaptation Evolution Strategy (CMA-ES)

- A short introduction to Python (practice session, see also here)

- A practical approach to continuous optimization using cma.py (practice session)

Suggested reading:

- Hansen, Nikolaus. The CMA Evolution Strategy: A Tutorial. 2011.

- Ollivier, Yann, Ludovic Arnold, Anne Auger, and Nikolaus Hansen. Information-Geometric Optimization Algorithms: A Unifying Picture via Invariance Principles. arXiv:1106.3708.
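The link between natural gradients and evolution strategies can be sketched for the mean of an isotropic Gaussian search distribution N(m, sigma^2 I) with sigma held fixed. The Fisher metric for m is I / sigma^2, so the natural gradient of E[W(x)] with respect to m is E[W(x) (x - m)]. Estimate it with rank-based weights W on the best half of lam samples and take a step of size 1. The result is the weighted recombination m <- sum_i w_i x_(i:lam) used in evolution strategies. This is a simplified sketch under stated assumptions: fixed sigma, a sphere test function, and hypothetical function names. It is not CMA-ES, because covariance matrix and step-size adaptation are omitted. For real experiments the lectures use cma.py.

```python
import math
import random


def sphere(x):
    """Test function to minimize: squared Euclidean norm."""
    return sum(xi * xi for xi in x)


def rank_weights(mu):
    """Positive, log-decreasing weights for the mu best samples, summing to 1."""
    raw = [math.log(mu + 0.5) - math.log(i + 1) for i in range(mu)]
    total = sum(raw)
    return [w / total for w in raw]


def igo_mean_step(f, m, sigma, lam, rng, lr=1.0):
    """One natural-gradient step for the mean of N(m, sigma^2 I), sigma fixed.

    With Fisher metric I / sigma^2 for m, the natural gradient of E[W(x)] is
    E[W(x) (x - m)], estimated here with rank-based weights on the best half.
    """
    samples = [[mj + sigma * rng.gauss(0.0, 1.0) for mj in m] for _ in range(lam)]
    samples.sort(key=f)  # minimization: best samples first
    weights = rank_weights(lam // 2)
    best = samples[: len(weights)]
    nat_grad = [sum(w * (x[j] - m[j]) for w, x in zip(weights, best)) for j in range(len(m))]
    return [mj + lr * gj for mj, gj in zip(m, nat_grad)]


rng = random.Random(1)
m = [1.0] * 5
for _ in range(300):
    m = igo_mean_step(sphere, m, sigma=0.1, lam=10, rng=rng)
print(sphere(m))  # far below the starting value sphere([1.0] * 5) = 5.0
```

With fixed sigma the mean only converges to a neighbourhood of the optimum whose size scales with sigma. This is one motivation for step-size adaptation.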

Contents of Lectures by Jan Peters

Suggested reading:

- Peters, Jan, and Stefan Schaal. Natural actor-critic. Neurocomputing 71, 7-9 (2008): 1180-1190.

Contents of Lectures by Luigi Malagò

Stochastic Optimization in Discrete Domains

- Stochastic Relaxation of Discrete Optimization Problems

- Information Geometry of Hierarchical Models

- Stochastic Natural Gradient Descent

- Graphical Models and Model Selection

- Examples of Natural Gradient-based Algorithms in Stochastic Optimization

Suggested reading:

- Amari, Shun-ichi. Information geometry on hierarchy of probability distributions. IEEE Transactions on Information Theory 47, 5 (2001): 1701-1711.
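A stochastic relaxation replaces optimization over x in {0,1}^n by optimization of F(theta) = E_theta[f(x)] over a statistical model. The sketch below performs stochastic natural gradient ascent under assumptions chosen for illustration: an independent Bernoulli model, OneMax as f, a fixed sample size and learning rate, and a bound |theta_i| <= 5 that keeps probabilities away from 0 and 1. In an exponential family with sufficient statistics T, grad F(theta) = Cov_theta(f, T) and the Fisher matrix is Cov_theta(T). For independent Bernoulli variables the Fisher matrix is diag(p_i (1 - p_i)), and the covariance with f is estimated from samples. Function names are hypothetical.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def onemax(x):
    """Hypothetical test problem: number of ones, to be maximized."""
    return sum(x)


def snga_step(f, theta, rng, n_samples=50, lr=0.2, bound=5.0):
    """One stochastic natural-gradient ascent step on F(theta) = E_theta[f(x)].

    Model: independent Bernoulli variables, p_i = sigmoid(theta_i), T(x) = x.
    grad F = Cov(f, T) (estimated from samples); Fisher matrix = diag(p_i (1 - p_i)).
    """
    p = [sigmoid(t) for t in theta]
    xs = [[1 if rng.random() < pi else 0 for pi in p] for _ in range(n_samples)]
    fs = [f(x) for x in xs]
    f_mean = sum(fs) / n_samples
    new_theta = []
    for i, (t, pi) in enumerate(zip(theta, p)):
        xi_mean = sum(x[i] for x in xs) / n_samples
        cov = sum((fk - f_mean) * (x[i] - xi_mean) for fk, x in zip(fs, xs)) / n_samples
        nat_grad = cov / (pi * (1.0 - pi))  # inverse Fisher times Cov(f, x_i)
        # Clip theta so that p_i stays away from 0 and 1.
        new_theta.append(max(-bound, min(bound, t + lr * nat_grad)))
    return new_theta


rng = random.Random(0)
theta = [0.0] * 10
for _ in range(200):
    theta = snga_step(onemax, theta, rng)
print([round(sigmoid(t), 3) for t in theta])  # probabilities pushed toward 1
```

For OneMax the exact natural gradient is 1 in every coordinate, since Cov(f, x_i) = p_i (1 - p_i). The sampled estimate fluctuates around that value.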

Contents of Lectures by Aasa Feragen and François Lauze


Aasa's lectures

- Recap of Differential Calculus

- Differential manifolds

- Tangent space

- Vector fields

- Submanifolds of R^n

- Riemannian metrics

- Invariance of Fisher information metric

- If time permits: the metric-geometry view of Riemannian manifolds, their curvature, and its consequences


François's lectures

- Riemannian metrics

- Gradient, gradient descent, duality

- Distances

- Connections and Christoffel symbols

- Parallelism

- Levi-Civita Connections

- Geodesics, exponential and log maps

- Fréchet Means and Gradient Descent

Suggested reading:

- Costa, Sueli I. R., Sandra A. Santos, and João E. Strapasson. Fisher information distance: a geometrical reading. arXiv:1210.2354.
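Exponential and log maps plus gradient descent are enough to compute a Fréchet (Karcher) mean. The minimal sketch below works on the unit sphere S^2, a manifold chosen for illustration, and its function names are hypothetical. The Fréchet function (1/2N) sum_i d(p, q_i)^2 has Riemannian gradient -(1/N) sum_i log_p(q_i). Each step therefore moves from p along exp_p of the averaged log-mapped data. The sketch assumes the data lie in a small enough region that the mean is unique, and that no data point is antipodal to the iterate.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def exp_map(p, v):
    """Exponential map on the unit sphere: follow the great circle from p in the
    tangent direction v for arc length |v|."""
    t = norm(v)
    if t < 1e-15:
        return list(p)
    return [math.cos(t) * pi + math.sin(t) * vi / t for pi, vi in zip(p, v)]


def log_map(p, q):
    """Logarithm map: tangent vector at p pointing to q with length d(p, q) = arccos<p, q>.
    Assumes q is not antipodal to p."""
    c = max(-1.0, min(1.0, dot(p, q)))
    theta = math.acos(c)
    if theta < 1e-15:
        return [0.0] * len(p)
    s = theta / math.sin(theta)
    return [s * (qi - c * pi) for pi, qi in zip(p, q)]


def frechet_mean(points, start, step=1.0, iters=100, tol=1e-12):
    """Riemannian gradient descent on (1/2N) sum_i d(p, q_i)^2."""
    r = norm(start)
    p = [x / r for x in start]
    for _ in range(iters):
        logs = [log_map(p, q) for q in points]
        g = [sum(comp) / len(points) for comp in zip(*logs)]  # minus the gradient
        if norm(g) < tol:
            break
        p = exp_map(p, [step * gi for gi in g])
        r = norm(p)
        p = [x / r for x in p]  # guard against round-off drift off the sphere
    return p


a = 0.5  # colatitude of the data points, in radians
points = [[math.sin(a) * math.cos(phi), math.sin(a) * math.sin(phi), math.cos(a)]
          for phi in (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)]
mean = frechet_mean(points, start=[0.3, 0.2, 0.9])
print(mean)  # by symmetry, close to the north pole (0, 0, 1)
```

Four points at equal colatitude, spaced evenly around the north pole, have the north pole as their Fréchet mean. The logs sum to zero there.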

Contents of Tutorial by Stefan Sommer

In the tutorial on numerics for Riemannian geometry on Tuesday morning, we will discuss computational representations and numerical solutions of some differential-geometry problems. The goal is to implement geodesic equations numerically for simple probability distributions, visualize the computed geodesics, compute Riemannian logarithms, and find mean distributions. We will follow the presentation in the paper "Fisher information distance: a geometrical reading", taking a computational viewpoint.

The tutorial is based on an IPython notebook that is available here. Please click here for details.
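For orientation before the session, here is a minimal sketch in the spirit of the tutorial. It is not the tutorial's notebook. It treats univariate normal distributions N(mu, sigma^2) with the Fisher metric ds^2 = (dmu^2 + 2 dsigma^2) / sigma^2, as in Costa et al. The geodesic equations of this metric are mu'' = 2 mu' sigma' / sigma and sigma'' = (sigma'^2 - mu'^2 / 2) / sigma. The code integrates them with fixed-step RK4 (our choice of integrator), which evaluates the exponential map. It then checks the geodesic length against the closed-form Fisher-Rao distance sqrt(2) * arccosh(1 + ((mu1 - mu2)^2 / 2 + (sigma1 - sigma2)^2) / (2 sigma1 sigma2)), obtained from the hyperbolic half-plane. Function names and initial values are hypothetical.

```python
import math


def geodesic_rhs(state):
    """Right-hand side for state = (mu, sigma, mu', sigma') under the Fisher metric
    ds^2 = (dmu^2 + 2 dsigma^2) / sigma^2 of univariate normals N(mu, sigma^2)."""
    _, s, dmu, ds = state
    return (dmu, ds, 2.0 * dmu * ds / s, (ds * ds - dmu * dmu / 2.0) / s)


def rk4_step(state, h):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, c):
        return tuple(x + c * y for x, y in zip(state, k))

    k1 = geodesic_rhs(state)
    k2 = geodesic_rhs(shifted(k1, h / 2))
    k3 = geodesic_rhs(shifted(k2, h / 2))
    k4 = geodesic_rhs(shifted(k3, h))
    return tuple(x + h / 6 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(state, k1, k2, k3, k4))


def shoot(mu0, sigma0, v_mu, v_sigma, steps=1000):
    """Exponential map: integrate the geodesic with initial velocity (v_mu, v_sigma)
    over t in [0, 1] and return the visited points (mu, sigma)."""
    state = (mu0, sigma0, v_mu, v_sigma)
    path = [(mu0, sigma0)]
    h = 1.0 / steps
    for _ in range(steps):
        state = rk4_step(state, h)
        path.append(state[:2])
    return path


def fisher_distance(p, q):
    """Closed-form Fisher-Rao distance between N(mu1, sigma1^2) and N(mu2, sigma2^2)."""
    (m1, s1), (m2, s2) = p, q
    return math.sqrt(2) * math.acosh(1 + ((m1 - m2) ** 2 / 2 + (s1 - s2) ** 2) / (2 * s1 * s2))


path = shoot(0.0, 1.0, 1.0, 0.0)
speed = math.sqrt(1.0 ** 2 + 2 * 0.0 ** 2) / 1.0  # Fisher norm of the initial velocity
print(path[-1], speed, fisher_distance(path[0], path[-1]))  # length over t in [0, 1] equals the distance
```

A Riemannian logarithm can then be approximated by shooting, that is, by solving for the initial velocity whose geodesic ends at a given distribution. That step is left out here.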