Introduction:

This page contains a short presentation of the work Jakob Sloth and Søren Hauberg did as part of their Master's thesis. The key problem of the thesis was to produce a theatrical play in which computers act autonomously on stage together with a human actor. Specifically, a robot acted on stage, images were projected onto the stage, and the sound was computer controlled. All of this was done autonomously.

The idea was to control the computer actors by observing the behaviour of the human actor; specifically, the gestures of the human actor were used to control the computer actors. As in any play, the story is known in advance, so the behaviour of the human actor can be used to estimate 'the current position' in the manuscript. To make this estimate as robust as possible, we modelled the process statistically. In doing so, we came up with a new way to encode the duration and order of states in hidden Markov models.

The play is based on a children's book. It lasts approximately 10 minutes and contains about 70 different computer actions.

This work was inspired by the excellent work of Pinhanez and Bobick [ref].

Download Papers:

Master's thesis: Manuskriptbaseret opførsel i et dynamisk miljø (Manuscript-based behaviour in a dynamic environment), 2007. [pdf] (in Danish)

An Efficient Algorithm for Modelling Duration in Hidden Markov Models, with a Dramatic Application. In Journal of Mathematical Imaging and Vision, Tribute to Peter Johansen, 2008. [pdf]

The play is based on parts of the children's book 'Troels Jorns bog om den sultne løve · den glade elephant · den lille mus · og Jens Pismyre' (Troels Jorn's book about the hungry lion, the happy elephant, the little mouse and Jens Pismyre), written by the famous Danish artist Asger Jorn. The story takes place on the savanna, where we meet the hungry and therefore very angry lion. The lion is looking for food and sees a giraffe. The giraffe gets very scared but tricks the lion into eating leaves, and runs away. The lion is now even angrier. Then it meets a nice, juicy pig and sneaks up on it so that the pig cannot hide. The pig gets very scared but manages to trick the lion into eating grass, and meanwhile it hides behind a stone. By now the lion is furious, but fortunately it meets a big elephant. Since the lion isn't all that intelligent, it tries to eat the elephant. But the elephant just laughs and throws the lion up into the sky. The story ends with a singing elephant and a very flat lion.

As you can tell, this is a very dramatic story, so if you haven't read the book, you really should!

[Figure: The children's book.]

The Actors:

Name: The Pig

Species: pig

Gender: male

Age: 2 years

Eye color: black

Favourite food: grass

Creator: Maria Sloth

Name: Jumbo

Species: elephant

Gender: female

Age: 30 years

Eye color: black

Favourite food: fruits

Creator: Maria Sloth

Name: The Giraffe

Species: giraffe

Gender: male

Age: 5 years

Eye color: black

Favourite food: leaves

Creator: Maria Sloth

Name: The Lion

Species: lion

Gender: male

Age: 30 years

Eye color: blue

Favourite food: raw meat

Creator: Jane and Rasmus Sloth

Gesture recognition:

For the computer actors to participate in the play autonomously, they need to know how far the play has progressed. To determine this, we use a system for visual gesture recognition.

This system is based on Motion History Images (MHIs) as described by Davis and Bobick [MHI]. An MHI summarises a sequence of images in a single image using a decay operator: pixels where motion has just occurred are bright, and older motion gradually fades. The resulting images are easy for humans to interpret, which is a very useful property in practice. To perform the actual recognition, we compute global image features of the MHIs and match these against a database of known gestures. Davis and Bobick suggested using Hu moments, but we found that Zernike moments improved the recognition considerably.
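To make the decay operator concrete, here is a minimal sketch of the MHI update as it is commonly stated for Davis and Bobick's method: pixels that are set in the current binary image get the maximum value tau, and all other pixels are decremented towards zero. Images are plain Python lists of lists, and the decay length `TAU` is a hypothetical value; the settings used in the play are not given on this page.

```python
# Minimal sketch of a Motion History Image (MHI) update in the style of
# Davis and Bobick. Images are lists of lists; TAU is a hypothetical value.

TAU = 5  # hypothetical decay length: motion older than TAU frames has faded out


def update_mhi(mhi, silhouette, tau=TAU):
    """Return the next MHI from the previous MHI and the current binary image.

    Pixels set in the binary image get the maximum value tau; all other
    pixels decay by one step, clamped at zero.
    """
    return [
        [tau if s else max(m - 1, 0) for m, s in zip(mhi_row, sil_row)]
        for mhi_row, sil_row in zip(mhi, silhouette)
    ]


def mhi_from_sequence(silhouettes, tau=TAU):
    """Fold a sequence of equally sized binary images into a single MHI."""
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    mhi = [[0] * cols for _ in range(rows)]
    for silhouette in silhouettes:
        mhi = update_mhi(mhi, silhouette, tau)
    return mhi


def normalise(mhi, tau=TAU):
    """Scale to [0, 1]: recent motion is bright, older motion is darker."""
    return [[m / tau for m in row] for row in mhi]


# Usage: a one-pixel "actor" moving right by one pixel per frame.
frames = [
    [[1, 0, 0, 0]],
    [[0, 1, 0, 0]],
    [[0, 0, 1, 0]],
]
mhi = mhi_from_sequence(frames)
print(mhi)             # [[3, 4, 5, 0]]
print(normalise(mhi))  # [[0.6, 0.8, 1.0, 0.0]]
```

The brightness gradient along the row encodes the direction of the motion, which is what makes a single MHI informative about a whole gesture.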

In the actual play, we recognised 13 different gestures; their MHIs are shown below.

[Figure: An MHI of a jumping human.]

[Figure: The different gestures that can be recognised.]
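The matching step described earlier (global image features of each MHI compared against a database of known gestures) can be sketched with Zernike moment magnitudes and a nearest-neighbour rule. This is an illustrative sketch, not the thesis code: the moment orders in `ORDERS`, the gesture names and the Euclidean nearest-neighbour classifier are assumptions, and the normalisation for translation and scale that a real system would need is omitted. The magnitude |A_nm| is used because it does not change when the image is rotated.

```python
import cmath
import math

# Hypothetical choice of (n, m) orders; the orders used in the play are not stated.
ORDERS = [(2, 0), (2, 2), (3, 1), (3, 3), (4, 0), (4, 2), (4, 4)]


def radial_poly(n, m, rho):
    """Zernike radial polynomial R_n^m(rho); zero when n - |m| is odd."""
    m = abs(m)
    if (n - m) % 2:
        return 0.0
    total = 0.0
    for s in range((n - m) // 2 + 1):
        coeff = (-1) ** s * math.factorial(n - s) / (
            math.factorial(s)
            * math.factorial((n + m) // 2 - s)
            * math.factorial((n - m) // 2 - s)
        )
        total += coeff * rho ** (n - 2 * s)
    return total


def zernike_magnitude(img, n, m):
    """|A_nm| of an image, with pixel centres mapped into the unit disc."""
    h, w = len(img), len(img[0])
    acc = 0j
    for y, row in enumerate(img):
        for x, val in enumerate(row):
            if not val:
                continue
            xn = (2 * x + 1 - w) / w  # pixel centre in [-1, 1]
            yn = (2 * y + 1 - h) / h
            rho = math.hypot(xn, yn)
            if rho > 1.0:
                continue  # Zernike polynomials are defined on the unit disc
            theta = math.atan2(yn, xn)
            # Conjugate basis function: R_n^m(rho) * exp(-i m theta).
            acc += val * radial_poly(n, m, rho) * cmath.exp(-1j * m * theta)
    pixel_area = (2 / w) * (2 / h)
    return abs((n + 1) / math.pi * acc * pixel_area)


def features(img, orders=ORDERS):
    """Rotation-invariant feature vector of Zernike moment magnitudes."""
    return [zernike_magnitude(img, n, m) for n, m in orders]


def classify(img, database):
    """Return the name of the nearest gesture template (Euclidean distance)."""
    f = features(img)
    return min(database, key=lambda name: math.dist(f, database[name]))


# Usage with two hypothetical 6x6 "MHIs": a vertical bar and a centred blob.
bar = [[1 if x in (2, 3) else 0 for x in range(6)] for _ in range(6)]
blob = [[1 if 2 <= x <= 3 and 2 <= y <= 3 else 0 for x in range(6)] for y in range(6)]
database = {"jump": features(bar), "crouch": features(blob)}

rotated_bar = [list(r) for r in zip(*bar[::-1])]  # 90 degree rotation
print(classify(rotated_bar, database))
```

The rotated bar is matched to the "jump" template because its moment magnitudes equal those of the upright bar; with Hu moments the same invariance holds, so the benefit of Zernike moments reported above is a matter of discriminative power rather than invariance.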

Background subtraction:

The MHIs are computed from a sequence of binary images of the human actor. To create these binary images, we use a geometry-based background subtraction method based on the work of Ivanov and Bobick [ref].

This method has the useful property that it is independent of the lighting on stage. Since we project images onto the stage, the lighting changes quite a bit during the play, which makes this independence important. The downside is that the method requires three calibrated cameras.

The following images illustrate the basic idea of the method. We assume that the geometry of the stage is fixed, which means that we can warp the image from one camera into the coordinate system of another camera. This allows us to compute a difference image in which the parts of the image that are not part of the stage geometry, such as the actor, stand out. From this difference image it is easy to compute the final binary image, for example by thresholding.

[Figure, four panels: image from the reference camera; transformed image; absolute difference; the final binary image.]
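The warp, difference and threshold steps can be sketched as follows, assuming the warp between two cameras has already been derived from the calibration and stored as a per-pixel lookup table (`warp_map`, a hypothetical name). The sketch uses a single camera pair and a hypothetical threshold of 20 grey levels; it is not the implementation used in the play.

```python
def warp(image, warp_map):
    """Resample `image` into the reference camera's pixel grid.

    warp_map[y][x] = (y2, x2) names the pixel of `image` that sees the same
    point of the empty stage as reference pixel (y, x). This lookup table is
    a hypothetical stand-in for one derived from the camera calibration.
    """
    return [[image[y2][x2] for (y2, x2) in row] for row in warp_map]


def abs_difference(a, b):
    """Pixel-wise absolute difference of two equally sized images."""
    return [[abs(p - q) for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def threshold(image, t):
    """Binary image: 1 where the value exceeds t, else 0."""
    return [[1 if v > t else 0 for v in row] for row in image]


def background_subtract(reference, other, warp_map, t=20):
    """Warp, difference and threshold; t=20 is a hypothetical threshold."""
    return threshold(abs_difference(reference, warp(other, warp_map)), t)


# Toy one-row images: the second camera sees the stage shifted by one pixel.
warp_map = [[(0, 1), (0, 2), (0, 3), (0, 4)]]
empty_ref = [[10, 50, 90, 130]]
empty_other = [[99, 10, 50, 90, 130]]
print(background_subtract(empty_ref, empty_other, warp_map))  # [[0, 0, 0, 0]]

# The actor (value 200) is off the stage surface, so the two cameras see it
# at positions that do not correspond under the stage warp.
actor_ref = [[10, 200, 90, 130]]
actor_other = [[99, 10, 50, 200, 130]]
print(background_subtract(actor_ref, actor_other, warp_map))  # [[0, 1, 1, 0]]
```

In the second call the mask also marks pixel 2, where the actor appears in the second camera's view rather than in the reference view; this single-pair sketch makes no attempt to suppress such "ghost" detections. If the stage lighting changes, both cameras see roughly the same change at a given stage point (assuming a roughly matte stage surface), so the difference of the empty-stage images stays near zero. This is the lighting independence described above in its simplest form.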

The basic idea is to use the recognised gestures to trigger the actions of the computer actors. To make this process as robust as possible, we use a statistical model of the manuscript of the play. Using this model, we can compute the probability of each computer action, and when this probability is large enough, the action is performed.
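As an illustration of how action probabilities can drive the computer actors, here is a sketch using exact filtering in a plain discrete HMM (the forward recursion), not the extended model or the particle filter used in the play. The three manuscript states, the transition probabilities, the action names and the 0.9 threshold are all hypothetical; the per-state likelihoods stand in for the output of the gesture recogniser.

```python
def forward_step(belief, transition, likelihood):
    """One step of HMM filtering: predict with the transition matrix,
    weight by the observation likelihood, then renormalise."""
    n = len(belief)
    predicted = [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]
    unnormalised = [p * l for p, l in zip(predicted, likelihood)]
    z = sum(unnormalised)
    return [u / z for u in unnormalised]


def actions_to_fire(belief, actions, fired, threshold=0.9):
    """Actions whose state probability exceeds the (hypothetical) threshold
    and that have not been performed yet."""
    ready = []
    for state, prob in enumerate(belief):
        action = actions.get(state)
        if action and prob > threshold and action not in fired:
            ready.append(action)
    return ready


# Usage: a hypothetical left-to-right manuscript with three states.
transition = [
    [0.8, 0.2, 0.0],
    [0.0, 0.8, 0.2],
    [0.0, 0.0, 1.0],
]
actions = {1: "robot_enters", 2: "play_roar_sound"}  # hypothetical actions
belief = [1.0, 0.0, 0.0]
fired = []
observations = [[0.1, 0.9, 0.1]] * 3  # recogniser output favouring state 1
for likelihood in observations:
    belief = forward_step(belief, transition, likelihood)
    for action in actions_to_fire(belief, actions, fired):
        fired.append(action)
        print("perform", action)
```

Keeping a record of performed actions ensures that each action fires once, even though the probability of its state stays high for several frames.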

The model is a simple extension of the standard discrete hidden Markov model (HMM). The extension allows us to encode the order and expected duration of states in a simple yet efficient way. The model can be implemented using the optimal particle filter, which makes it very robust. For details on this quite general model, see our journal paper listed under Download Papers.

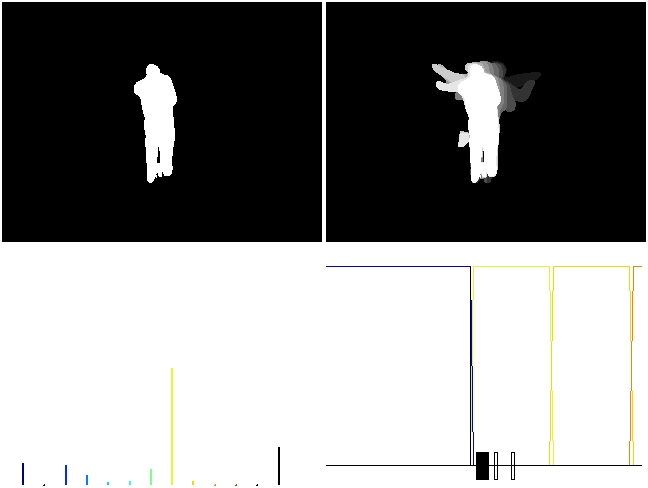
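The sketch below shows the general shape of particle filtering over a left-to-right chain of manuscript states in which each particle also remembers how long it has been in its current state, so that a minimum duration can be enforced. It is a plain bootstrap particle filter with multinomial resampling, not the optimal particle filter or the duration model from our paper; `min_duration`, `p_leave` and the observation sequence are hypothetical.

```python
import random


def propagate(particle, min_duration, p_leave, n_states, rng):
    """Move one particle (state, frames_in_state) forward by one frame.

    A particle may only advance to the next state once it has spent at least
    min_duration[state] frames in its current state; the last state absorbs.
    """
    state, age = particle
    if state < n_states - 1 and age >= min_duration[state] and rng.random() < p_leave:
        return (state + 1, 0)
    return (state, age + 1)


def particle_filter_step(particles, likelihood, min_duration, p_leave, rng):
    """Bootstrap particle filter step: propagate, weight, resample."""
    n_states = len(likelihood)
    moved = [propagate(p, min_duration, p_leave, n_states, rng) for p in particles]
    weights = [likelihood[state] for state, _ in moved]
    if sum(weights) == 0:
        return moved  # observation rules out every particle; skip resampling
    # Multinomial resampling proportional to the observation likelihood.
    return rng.choices(moved, weights=weights, k=len(moved))


def state_probabilities(particles, n_states):
    """Fraction of particles in each manuscript state."""
    counts = [0] * n_states
    for state, _ in particles:
        counts[state] += 1
    return [c / len(particles) for c in counts]


# Usage with hypothetical numbers: three manuscript states, 200 particles.
rng = random.Random(0)
min_duration = [3, 3, 0]  # hypothetical minimum number of frames per state
particles = [(0, 0)] * 200
observations = [[0.9, 0.1, 0.1]] * 3 + [[0.1, 0.9, 0.1]] * 6
history = []
for likelihood in observations:
    particles = particle_filter_step(particles, likelihood, min_duration, 0.5, rng)
    history.append(state_probabilities(particles, 3))
print(history[-1])
```

Because no particle may leave state 0 during its first three frames, every particle is still in state 0 after three observations, whatever the observations say; the duration constraint thus guards against skipping ahead in the manuscript on the strength of a single misrecognised gesture.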

The model has the nice property that it is easy to visualise. We developed a tool that visualises the state of the model in real time. A movie of this visualisation can be found below, but first a short explanation is in order.

One frame from the movie is shown below. The frame consists of four images. The top-left image shows the binary image produced by the background subtraction, and the top-right image shows the corresponding MHI. The bottom-left image shows the probabilities of each of the 13 gestures (this is the input to the model). The bottom-right image illustrates the model: the 'boxes' are the states of the system, and the particles are drawn at the bottom of the image. The colors of the boxes correspond to the colors used in the bottom-left image.

[Figure: Example of visualisation.]

Watch Movie:

Model visualisation, duration 10 min [Movie, 31 MB]

Source Code:

Here we provide source code for GNU Octave 3.0 that implements the extension of HMMs for duration modelling.

Source code:

Coming real soon -- we promise :-)
